In this document, the first part of the results of our ongoing research into LLMs, we examine the potential for large language models to produce malware. Our focus is on open-source models, as these lend themselves more easily to malicious activities (logging in to ChatGPT and trying to write ransomware is probably bad OpSec).

Large language models (LLMs) offer an interesting opportunity for software development, both through AI pair programmers (Sourcegraph Cody, GitHub Copilot) and through LLMs built specifically for solving code problems. “Work smarter, not harder” is the principle behind these tools, and criminal activity is no exception: take “WormGPT”, a (subpar) paid GPT knockoff aimed specifically at aiding malicious activity. The future is now with AI, and adversaries are catching up – we should be too.

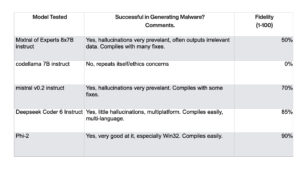

An overview of the results is shown in the table below.

Download the document to read more.